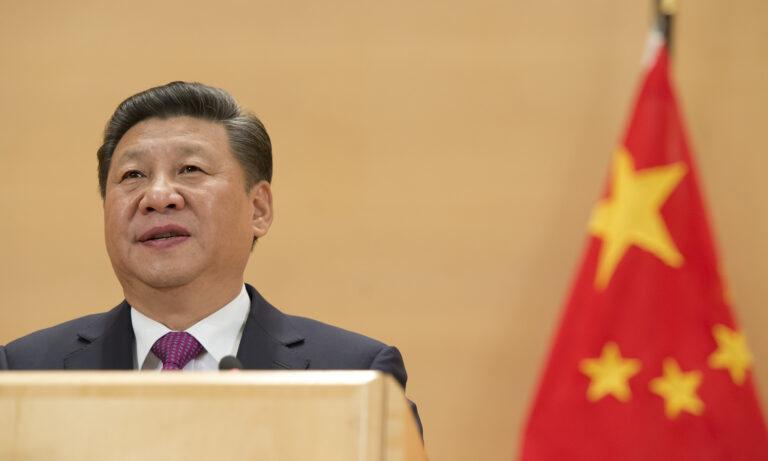

As Xi aims to tame the algorithm, his vision is an interesting mirror for the world.

“We must use mainstream values to steer algorithms,” Xi Jinping proclaimed in 2019. Since then, China has become the first country in the world to regulate recommendation algorithms and then deepfakes, and more recently it was also a first mover in issuing draft measures on generative AI.

The regulations are each relatively blunt, patchwork, and ad hoc responses to new advances in information technologies, but they are likely to have a profound effect. They draw on a comprehensive ideological framework for regulating the algorithms that shape online expression. A closer look at this framework helps explain how Xi is trying to make the new AI revolution fit online content management, and how this may affect Europe.

Regulating Information as a Public Good

In the eyes of Beijing, information is a public good and must be regulated as such. Content algorithms must, therefore, serve the interests of the public – as defined by the Communist Party – first. As in much of the Western world, filter bubbles are one crucial concern. In China specifically, however, the worry is also that they could create echo chambers that challenge officially endorsed values. Such echo chambers could call into question narratives like those promoting childbirth in the wake of China’s declining population. Authorities equally endorse the paternalistic view that citizens need to be protected from content appealing to primal instincts, like obscenity. Finally, “false information”, whether false in the objective sense of the term or merely politically undesired, needs to be filtered out outright.

As early as 2019, China’s cyberspace authorities consolidated their vision for the online ecosystem – and by extension, the role algorithms must play. The Chinese approach differentiates three types of information: illegal information, negative information, and positive information.

Although the boundaries between the categories are far from absolute, the essence is as follows. Illegal information covers everything from sexually explicit content to “false information” or content harming “national security.” Citizens can be criminally charged for disseminating such content. Negative information includes vulgarity and sensationalized content, but also content “violating social mores.” Individual citizens typically will not encounter trouble if they post this, but professional content creators and platforms can lose licenses if they fail to “resist” such content. Finally, positive information is content reflecting positively on the Party, content that shows off Chinese culture, or “teaches taste, style, and responsibility”. Platforms and producers are required to promote such content.

Censorship, the outright deletion of information, is but one part of the equation. On its own, it cannot promote “positive information” – it creates a void but cannot fill it. This is where algorithms come in. Xi wants algorithms to convey specific values in the content they generate or recommend. Not the values of commercial companies, which would love to see people as addicted to their platforms as possible, but those of the party-state. In other words, algorithms must make sure “positive information” flourishes and the rest withers.

However, not all of this is political. Regulations prescribe that algorithms must have extra safeguards to prevent harm to minors. This refers, among other things, to viral but dangerous internet challenges like the 2012 “Cinnamon Challenge” in the US. Strict regulation of this kind is why Douyin – the counterpart of TikTok for the Chinese market – shows children very different content than its international sibling does. Other provisions require conspicuous labeling of AI-generated content, special features for the elderly, and measures to prevent harm to users’ mental health.

Matryoshka-doll Responsibility

Within this system, every citizen or platform is responsible for the information they host and transmit. It resembles a matryoshka doll: app stores are responsible for the apps they distribute, these apps are responsible for the content they host, group chat moderators for the content in their groups, and so on. In this way, not just the creator of a piece of information but also the platform becomes legally liable if its algorithms recommend criticism of Xi Jinping to users. Former US President Trump, known for his China-sceptic stance, unironically sought to introduce similar legislation during his time in office.

Beyond the sheer will to insert values into algorithmic design, this points to other fundamental differences with the European Union’s approach to tech. In China, regulators judge platforms not just on the measures they take, but above all on the outcome. This is very effective for enforcing the regulations. Because most tech companies in China rely on the domestic market for their revenue, they have relatively little leverage to contest the state’s measures. But it also leads to risk-averse behavior and over-censorship.

Generative AI Challenges the Framework

Although this general ideological and regulatory framework has remained consistent for the past few years, the revolution in generative AI (such as ChatGPT) might become its litmus test. Under the Chinese draft regulations, a tool like ChatGPT would be legally responsible as the “producer” of content instead of the people querying it. Among other requirements, its content would have to be truthful and must not “disrupt the economic or social order.” But whether something is “truthful” depends on the context: a novel is fictional, yet becomes “fake” if it is presented as reality.

Another example: under normal circumstances, pictures of, say, football fans celebrating their team would not be considered sensitive. But during the COVID-19 pandemic, photos of mask-less celebrations in Qatar fueled frustration over the strict domestic lockdowns in China. In other words, something totally acceptable can become sensitive on a whim. It would be an immense challenge for AI companies to manage this risk.

Scholars in China have proposed several amendments to these draft rules, recommending that authorities require AI companies to take “sufficient measures” to guarantee accuracy instead of having to take full responsibility. It remains to be seen whether China’s regulators will agree with these proposals, given their focus on security above everything. So far, companies are finding their own ways to deal with the challenge: some only cite domestic sources, while others restrict the questions that can be asked. The restrictions on data sources in particular are likely to have a far-reaching impact on the quality of the models’ output.

China’s “Red” Algorithms in Europe?

TikTok is the elephant in the room in this discussion. Created by Chinese tech giant ByteDance, it faces intense scrutiny from many European governments over concerns about influence and data security, and its algorithm is at the center of that scrutiny. So, could China’s framework for algorithms influence TikTok, too?

In principle, the regulations outlined above only apply to services provided within the People’s Republic of China (PRC), which inherently excludes TikTok. Although TikTok and Douyin share most of their source code, it remains trivial to comply with PRC regulations without applying the same model to Europe – this is mostly a matter of tweaking parameters.

Concerns from conservative lawmakers in the US that TikTok’s algorithm feeds American children harmful content that Douyin would never allow in China ironically only prove this. The causality is simply reversed: TikTok is not purposely feeding harmful content to international users; the PRC is regulating things domestically that do not apply elsewhere. At the same time, this certainly does not absolve TikTok of its predatory business practices. Careful and reliable content moderation is expensive, and TikTok is clearly skimping on it. If Europe wishes to contend with the challenges posed by TikTok and by algorithms more broadly, perhaps Beijing’s vision for its algorithms could offer lessons on both the good and the bad. Just as Xi did in 2019, we need to think about the values algorithms should convey – beyond just economic profit. But unlike in China, we need to make sure these values are consistent with the democratic principles of individual liberty, accountability, and equality. The forthcoming EU AI Act will be the litmus test of whether Europe can face up to this challenge.

Written by

Vincent Brussee

Vincent Brussee is an Analyst at the Mercator Institute for China Studies (MERICS), where he focuses on China’s Social Credit System and regulation of the digital ecosystem.